In today’s rapidly evolving technological landscape, the adoption of AI-powered tools like ChatGPT has proven to be both a blessing and a curse for companies across various industries.

Recent incidents at Samsung Electronics, reported by The Economist Korea, have brought to light the potential risks associated with using AI chatbots like ChatGPT. In three separate cases, employees inadvertently exposed sensitive corporate information by inputting data into ChatGPT, which was then stored on external servers, beyond the company’s control.

This cautionary tale highlights the ever-present cybersecurity challenges that businesses face, even while utilizing cutting-edge AI technologies.

In this post, we will look at the challenges that companies, both large and small, may encounter when using tools like ChatGPT. More importantly, we will share valuable insights and practical tips to help you safeguard your organization’s sensitive data and avoid similar mishaps, ensuring a responsible and secure AI adoption journey.

A Snapshot of ChatGPT’s Rising Stardom and the Cyber Threats Lurking Behind

Businesses of all sizes are eager to integrate AI into their operations, for obvious reasons. ChatGPT, an advanced language model developed by OpenAI, has the remarkable ability to understand context, generate human-like text, and respond intelligently to user inputs. Its potential business applications are nearly limitless.

However, as Spider-Man’s Uncle Ben wisely said, “With great power comes great responsibility.” The rapid adoption of AI technologies like ChatGPT presents an array of potential cyber threats that businesses must address. According to recent studies by McKinsey and others, AI-powered cyberattacks are becoming more sophisticated and harder to detect, posing significant risks to businesses of all sizes.

So, what steps can you take to protect your data?

A Little Background on ChatGPT

ChatGPT and its Capabilities

ChatGPT, a state-of-the-art language model developed by OpenAI, is one of a series of GPT (Generative Pre-Trained Transformer) tools that have been making waves in the AI landscape. It uses advanced machine learning techniques, particularly deep learning and natural language processing, to understand context and generate human-like text based on the inputs it is given. Its capabilities include answering questions, writing high-quality content, generating code, and even engaging in simulated conversations.

The astounding capabilities of ChatGPT are due to its training on vast amounts of text data, which allows it to generate coherent, contextually relevant, and creative responses. According to OpenAI, GPT-3, the model family from which ChatGPT descends, has 175 billion parameters (the learned values that shape its word choices), which made it one of the largest language models at the time of its release. OpenAI has not disclosed a parameter count for GPT-4, and widely circulated figures such as 100 trillion are unconfirmed.

AI’s Growing Role in Industry

AI technologies, including ChatGPT and other advanced models, are becoming increasingly important tools across a wide range of industries. In fact, a recent projection by PwC states that AI adoption could contribute as much as $15.7 trillion to the global economy by 2030.

AI’s Growth Breakdown by Sector

Healthcare: AI is being used to improve diagnostics, predict patient outcomes, and develop personalized treatment plans. According to Accenture, AI applications in healthcare could create $150 billion in annual savings for the U.S. healthcare economy by 2026.

Finance: AI plays a vital role in fraud detection, credit risk assessment, and algorithmic trading. A report by Autonomous Research estimates that AI technologies could lead to a 22% cost reduction in financial services by 2030, equating to $1 trillion in savings.

Retail: The retail industry is undergoing a revolution thanks to the impact AI is having on personalized recommendations, inventory management, and supply chain optimization. According to a report by Capgemini, retailers implementing AI could save up to $340 billion by 2022.

Manufacturing: AI-powered predictive maintenance, quality control, and production optimization are transforming the manufacturing sector. McKinsey estimates that AI-driven improvements in manufacturing could generate up to $3.7 trillion in value by 2025. Source: McKinsey & Company, “AI in Production: A Game Changer for Manufacturers.”

As businesses continue to integrate AI solutions into their workflows, it becomes crucial to understand the potential cyber risks of AI, and to implement strategies to mitigate those risks.

Potential Cyber Threats Associated with AI Adoption

Data Breaches and Sensitive Information Exposure

As businesses adopt AI technologies like ChatGPT, they must be wary of the potential for data breaches and exposure of sensitive information. AI systems often require access to large datasets, some of which may contain confidential or proprietary data. Inadequate security measures can lead to devastating consequences, including financial loss and reputational damage.

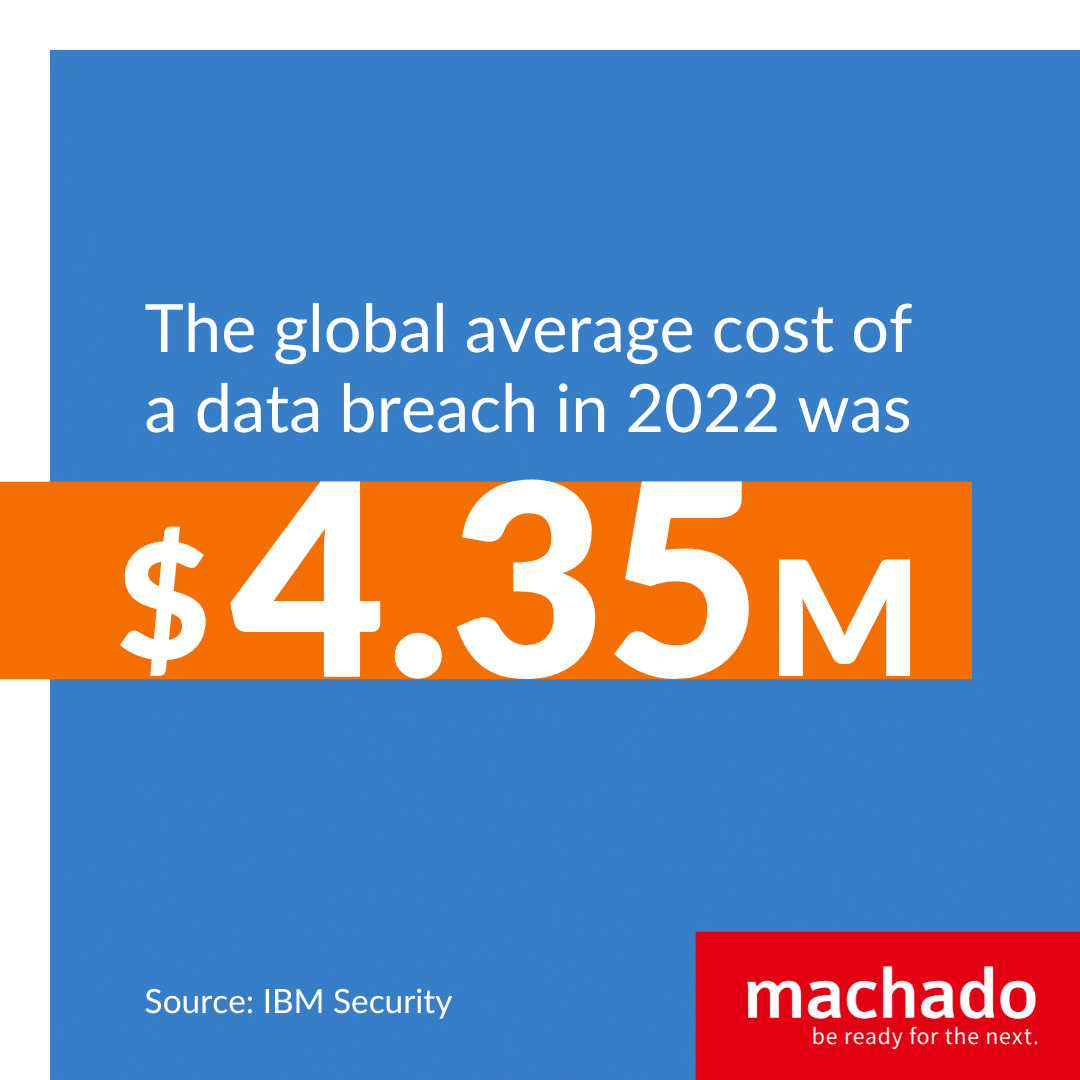

For instance, according to a report by IBM Security, the global average cost of a data breach in 2022 was $4.35 million, while the average cost in the U.S. was a staggering $9.44 million.

In addition to direct financial losses, businesses may also face regulatory penalties for failing to protect sensitive information. The implementation of strict data protection regulations, such as the EU’s General Data Protection Regulation (GDPR) and the California Consumer Privacy Act (CCPA), highlights the growing importance of data privacy and security in the age of AI.

Malicious Use of AI for Cyberattacks

AI technologies can be a double-edged sword: while they offer immense benefits, they can also be exploited by cybercriminals to launch sophisticated attacks. ChatGPT and similar AI models could be used to create highly convincing phishing emails or craft personalized social engineering attacks, making it even more challenging for individuals and organizations to identify and prevent these threats.

A study by the Center for a New American Security (CNAS) found that 87% of cybersecurity experts surveyed believe that AI and machine learning technologies will be used to power cyberattacks in the next three years. Source: CNAS, “The Malicious Use of Artificial Intelligence in Cybersecurity.”

Insider Threats and Human Factors

The integration of AI technologies like ChatGPT into business processes creates new vulnerabilities that can lead to cybersecurity incidents. Employees who misuse or mishandle AI tools, either intentionally or unintentionally, can expose the organization to a range of risks, including data breaches, intellectual property theft, and non-compliance with regulations.

The Ponemon Institute’s “2022 Cost of Insider Threats Global Report” found that insider-related incidents have doubled since 2020, and that 56% of those occurrences were due to negligence.

To mitigate these potential cyber threats associated with AI adoption, businesses must implement robust security measures, establish clear usage policies, and invest in employee training to raise awareness of the risks and best practices in handling AI technologies.

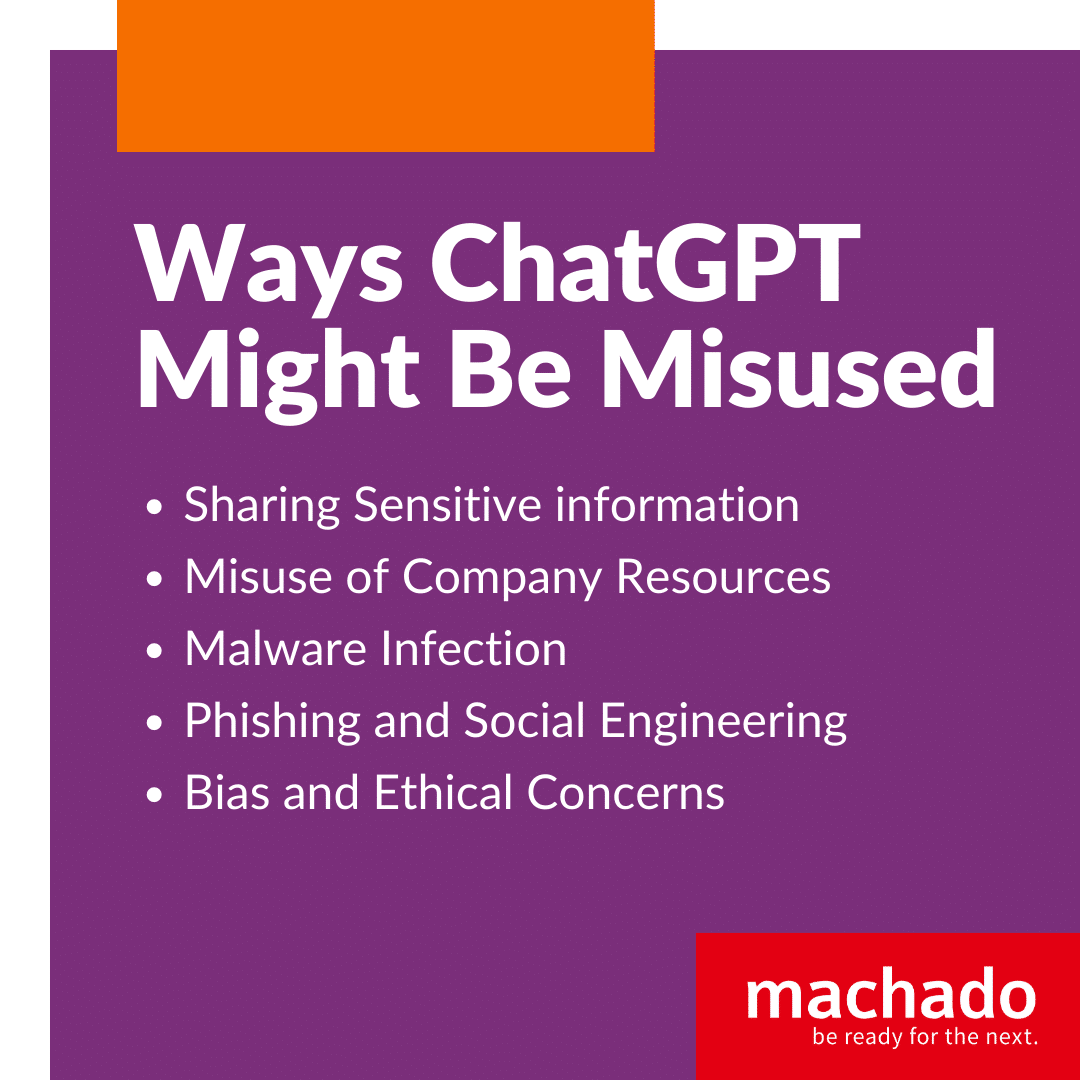

Ways ChatGPT Might Be Misused

AI tools like ChatGPT can boost productivity and reduce time wasted on repetitive tasks, but those improvements come at a cost. Let’s explore a few of the potential problem spots.

Sharing Sensitive Information

One potential risk associated with using ChatGPT is the inadvertent sharing of sensitive information. Employees might unknowingly expose confidential data, such as trade secrets or customer details, while interacting with the AI system. In multiple studies, well over half of all employees reported having accidentally shared sensitive information with unauthorized individuals.

Weakening Authentication Measures

Another risk is the weakening of authentication measures by using ChatGPT to generate or bypass passwords, tokens, or other security credentials. This could lead to unauthorized access to sensitive systems or data, potentially resulting in data breaches or other cybersecurity incidents. In 2019, Verizon’s Data Breach Investigations Report showed that 80% of data breaches were caused by weak or stolen passwords.
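One way to keep credentials out of a chatbot altogether is to generate them locally. The following is a minimal sketch, assuming Python's standard `secrets` module is acceptable under your password policy; the function name `generate_password`, the symbol set, and the 12-character minimum are illustrative assumptions, not a recommendation from any of the reports cited here.

```python
import secrets
import string

# Illustrative character set; adjust it to match your own password policy.
ALPHABET = string.ascii_letters + string.digits + "!@#$%^&*-_"


def generate_password(length: int = 20) -> str:
    """Generate a random password locally using the OS's secure random source."""
    if length < 12:
        raise ValueError("use at least 12 characters")
    while True:
        candidate = "".join(secrets.choice(ALPHABET) for _ in range(length))
        # Retry until the candidate mixes lowercase, uppercase, and digits.
        if (any(c.islower() for c in candidate)
                and any(c.isupper() for c in candidate)
                and any(c.isdigit() for c in candidate)):
            return candidate
```

Because the password never leaves the machine that created it, nothing about it is stored on a third party's servers. In practice, a vetted password manager serves the same purpose.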

Misuse of Company Resources

ChatGPT is fun and impressive, so employees might use it for personal purposes or other activities unrelated to their job responsibilities. This could waste company resources and, depending on the activity, could raise legal or ethical concerns. One recent study pegged the percentage of employees who admitted to using company resources for personal purposes, but does anyone really believe the number is that low?

Malware Infection

In some cases, employees could inadvertently expose their organization to malware infection by downloading or interacting with malicious ChatGPT models or applications. According to the 2020 Internet Security Threat Report by Symantec, successful malware attacks cost businesses an average of $2.6 million per year.

Phishing and Social Engineering

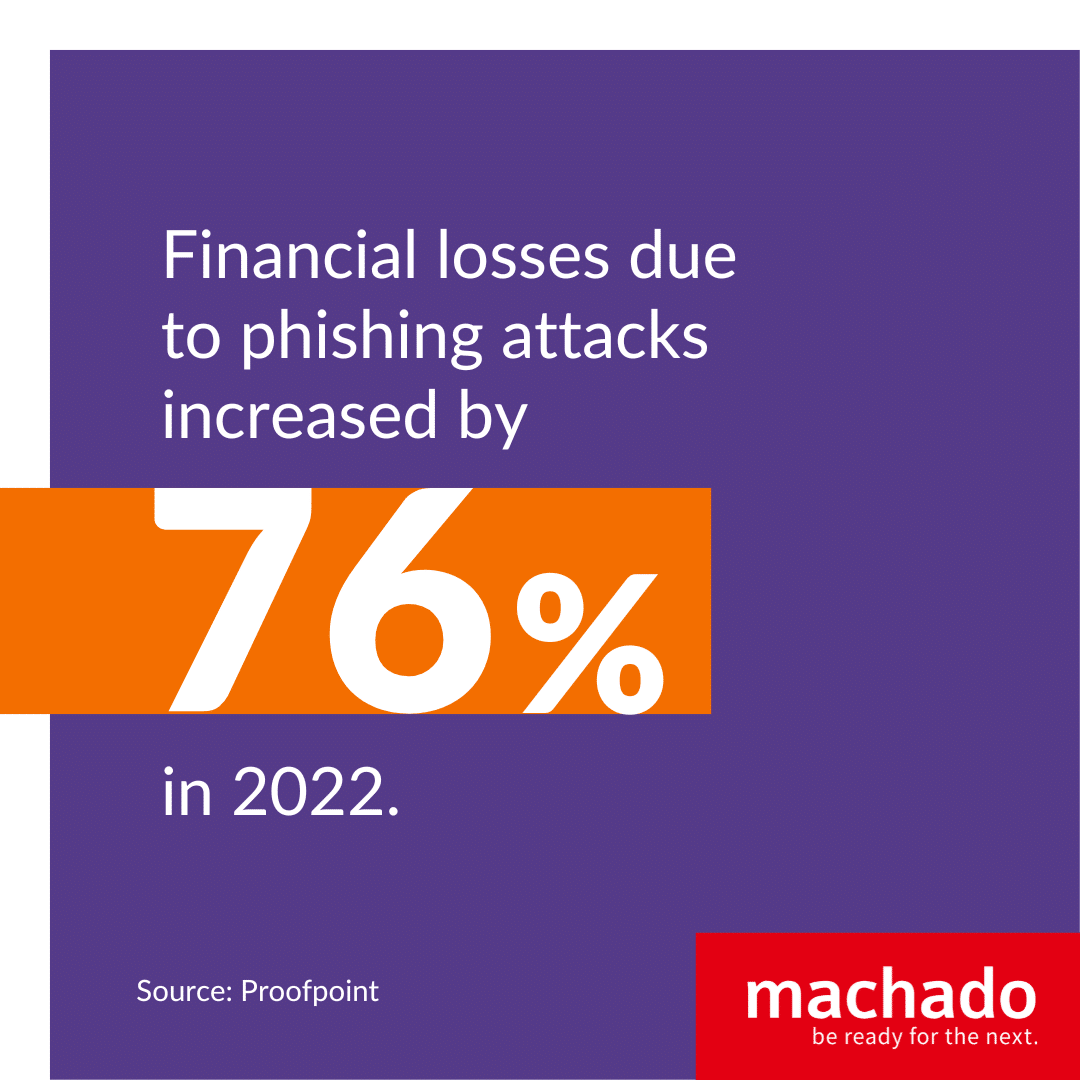

Cybercriminals could exploit ChatGPT to create sophisticated phishing emails or other social engineering attacks targeting employees. According to a report by Proofpoint, financial losses due to phishing attacks increased by 76% in 2022.

Bias and Ethical Concerns

Lastly, employees using ChatGPT might inadvertently contribute to the propagation of biased or ethically questionable content due to the inherent biases present in AI models, or in the information they are processing. A study by the AI Now Institute found that biased AI systems can lead to unfair representations of individuals or groups, potentially resulting in legal and reputational consequences for the organization.

Understanding and addressing these potential misuse scenarios will go a long way toward ensuring that your business gets all the benefits that come with AI adoption, while avoiding as many pitfalls as possible.

How to Mitigate Cyber Risks in AI Adoption

We don’t want to be all doom and gloom about AI; it has a lot of potential to propel businesses forward. With safeguards in place, using the following steps as a starting point, you can feel more at ease letting your employees leverage ChatGPT and other AI tools.

1. Establish Clear Usage Policies

One of the most critical steps in mitigating cyber risks associated with adoption of an AI tool like ChatGPT is to establish clear usage policies for employees. These policies should cover such aspects as:

- Purpose and scope: Define the specific business uses for ChatGPT and make certain that employees understand the contexts in which it is appropriate, and those in which it is not.

- Data handling: Create guidelines governing the types of data employees can input into ChatGPT, emphasizing the importance of protecting sensitive or confidential information.

- Access control: Specify the employees or teams that are authorized to access ChatGPT and implement role-based access controls to prevent unauthorized usage.

- Ethical considerations: Establish guidelines for using ChatGPT ethically, addressing potential bias and the generation of inappropriate or offensive content, and implementing a review process to screen for problematic material.

- Monitoring and auditing: Use a monitoring system to track ChatGPT usage, and perform periodic audits to ensure compliance with established policies.

No governing system is foolproof, and safeguards should be reviewed periodically as AI capabilities and usage evolve. But providing a well-defined framework for AI usage within your organization can go a long way toward minimizing the likelihood of misuse and the risk of security breaches.
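The access-control and data-handling points above can be partly enforced in software before a prompt ever leaves the company. The following is a minimal sketch, assuming prompts pass through an internal gateway you control; the role names, the three regular expressions, and the `screen_prompt` function are hypothetical placeholders, and real data-loss-prevention rules would need to be far broader.

```python
import re
from dataclasses import dataclass

# Hypothetical policy values; replace with your organization's own.
AUTHORIZED_ROLES = {"marketing", "support"}

# Illustrative patterns only; real screening rules need to cover much more.
SENSITIVE_PATTERNS = {
    "email": re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+"),
    "us_ssn": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
    "api_key": re.compile(r"\b(?:sk|key|token)[-_][A-Za-z0-9]{16,}\b"),
}


@dataclass
class ScreenedPrompt:
    allowed: bool    # False when the user's role is not authorized
    text: str        # prompt with sensitive matches replaced
    findings: list   # names of the patterns that matched


def screen_prompt(role: str, prompt: str) -> ScreenedPrompt:
    """Apply the access-control and data-handling rules to one prompt."""
    if role not in AUTHORIZED_ROLES:
        return ScreenedPrompt(allowed=False, text="", findings=[])

    findings = []
    redacted = prompt
    for name, pattern in SENSITIVE_PATTERNS.items():
        # Replace every match and record which rule fired.
        redacted, count = pattern.subn(f"[REDACTED {name.upper()}]", redacted)
        if count:
            findings.append(name)
    return ScreenedPrompt(allowed=True, text=redacted, findings=findings)
```

A gateway built this way could forward `text` to the AI tool only when `allowed` is true, and write `findings` to an audit log so that periodic reviews can see how often sensitive data is being caught.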

2. Provide Employee Training and Raise Awareness

Before your business starts utilizing ChatGPT or other AI technologies, proper employee training and awareness are a must. Consider the following strategies:

- Develop a comprehensive training program: Create a training program specifically tailored to address the unique risks and challenges associated with AI adoption for your business, including data security, ethical concerns, and potential misuse scenarios.

- Encourage continuous learning: As AI technologies continue to evolve, it is important that your employees who are using AI stay informed of new developments, risks, and best practices. Provide regular updates, workshops, or webinars to maintain awareness.

- Test yourselves with simulations and exercises: Simulate real-life scenarios, such as phishing attempts or social engineering attacks using AI-generated content, to help employees learn to recognize potential threats and respond to them effectively.

- Provide resources and support: Offer resources like guidelines, checklists, and FAQs to help employees navigate AI usage responsibly and securely. Encourage employees to seek assistance from designated support personnel if they encounter any issues or uncertainties.

3. Implement Robust Security Measures

To safeguard against the potential risks associated with AI adoption, it is imperative that businesses implement robust security measures, such as:

- Data protection: Use encryption, access controls, and regular backups to protect sensitive data used by ChatGPT and other AI systems. Ensure compliance with relevant data protection regulations, such as GDPR and CCPA.

- Secure AI infrastructure: Implement strong security measures for the AI infrastructure, including firewalls, intrusion detection systems, and regular vulnerability assessments. Keep all AI software and tools up to date with the latest security patches.

- Monitoring and incident response: Develop a monitoring strategy to detect unauthorized or suspicious AI usage and establish an incident response plan to address potential security breaches or misuse quickly and effectively.

- Vendor assessment: Thoroughly evaluate your AI vendors and providers to ensure they adhere to best practices in security, data privacy, and ethical AI development. Make sure that your contracts establish clear obligations regarding data protection and security.

- Network segmentation: Segment your network to limit the potential damage from a breach or compromise. Restrict the access that AI systems have to sensitive data and systems.
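As an illustration of the monitoring point, the sketch below scans a usage log and flags two simple signals: prompts from users who are not on the authorized list, and unusually heavy use in a single day. It assumes you already collect one record per prompt; the record keys (`user`, `date`), the user names, and the daily limit are hypothetical values chosen for the example.

```python
from collections import Counter

# Hypothetical values; tune them to your own policy and usage baseline.
AUTHORIZED_USERS = {"alice", "bob"}
DAILY_PROMPT_LIMIT = 50


def flag_suspicious_usage(events):
    """Return alerts for unauthorized users and unusually heavy daily use.

    Each event is a dict with the hypothetical keys "user" and "date"
    (an ISO date string), one dict per prompt sent to the AI tool.
    """
    alerts = []
    per_user_day = Counter((e["user"], e["date"]) for e in events)

    for (user, date), count in sorted(per_user_day.items()):
        if user not in AUTHORIZED_USERS:
            alerts.append(f"{date}: unauthorized user {user} sent {count} prompt(s)")
        elif count > DAILY_PROMPT_LIMIT:
            alerts.append(f"{date}: {user} sent {count} prompts (limit {DAILY_PROMPT_LIMIT})")
    return alerts
```

Alerts like these are only a starting signal for the incident response plan; a fixed threshold will miss slow leaks and may flag legitimate heavy users, so the rules should be reviewed alongside the periodic audits.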

Wrap Up

Recap of Key Points and Takeaways

In this blog post, we have explored the potential cyber threats associated with AI adoption, specifically focusing on ChatGPT. We have discussed some ways AI might be misused, and the potential security risks associated with these technologies. To mitigate these risks, we have provided guidelines for establishing clear usage policies, providing employee training and raising awareness, and implementing robust security measures.

Importance of Responsible AI Adoption and Cybersecurity Best Practices

The rapid adoption of AI technologies like ChatGPT offers significant benefits to businesses in many industries. However, it is crucial to recognize the potential cyber risks associated with AI adoption and to take proactive steps to ensure the responsible and secure use of these technologies. By implementing the best practices outlined in this blog post, businesses can safeguard their sensitive data, maintain compliance with industry regulations, and protect their reputation in the face of an ever-evolving threat landscape.

Next Steps

Are you prepared to harness AI’s potential while ensuring the safety and security of your business? Our team of experts can support you in navigating the complexities of AI adoption and tailor the best cybersecurity practices to meet your organization’s unique needs.

Don’t wait for a cyber incident to jeopardize your business – contact us today for a comprehensive assessment. We look forward to guiding you towards a secure and successful AI integration.
